The evolution toward DevOps

The first computer program dates back to 1843 and was an algorithm intended to calculate a sequence of Bernoulli numbers. It wasn’t until the late 1940s that computer programs and their data could be stored in computer memory. It took more than a century for hardware technology to provide a platform mature enough to support computer programming effectively.

Computer hardware without software is just a bunch of electronics, whereas software without hardware is text or binary data that cannot be executed. Each depends on the other to provide a usable product. This mutual dependency is important to keep in mind when it comes to creating and running computer programs.

When a software developer writes a new program, it must run on a piece of hardware. At a small scale, the developer can also maintain that hardware, e.g., a hobbyist programmer writing some code at home.

When things start to scale, say for a small business, the software developer can still keep a few on-premises servers updated and install each new software version as it becomes available. However, once the company grows, a single person can no longer keep the software up to date. The deployment process becomes increasingly complex, and when there is an outage, the software developer can fall into the trap of debugging or applying hotfixes directly on the production system. This makes deploying changes and keeping the system operational difficult, and especially hard to repeat safely and consistently.

In larger organizations, IT Operations teams were introduced to take over the deployment and maintenance aspects of the system. This gave software developers some breathing room to focus entirely on writing quality software. They would hand over new software versions to IT Operations, who would then deploy and maintain the latest software on the organization’s infrastructure.

The separation of Development and IT Operations was a good answer to the scalability and maintainability challenges of an organization’s IT infrastructure. However, as these systems became more complex, the separation showed its limits in delivering both high throughput (new software) and stability (mean time to recover). Proposals to address this limitation by merging development and operations methodologies emerged in the late 1980s and 1990s, and in 2009 the first DevOps conference was held.
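Throughput and stability can be made concrete as two simple metrics. What follows is a minimal Python sketch, not a standard implementation. It assumes a hypothetical `Incident` record holding an outage's start and recovery timestamps, and it measures stability as mean time to recover (MTTR), the average outage duration. Throughput is approximated as deployments per day. All names and sample timestamps are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical outage record: when it started and when service was restored."""
    started: datetime
    resolved: datetime


def mean_time_to_recover(incidents: list[Incident]) -> timedelta:
    """Stability metric: average time from the start of an outage to recovery."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.started for i in incidents), timedelta(0))
    return total / len(incidents)


def deployments_per_day(deploy_times: list[datetime], days: int) -> float:
    """Throughput metric: deployments in an observation window, per day."""
    return len(deploy_times) / days


# Illustrative data: a 2-hour outage and a 1-hour outage.
incidents = [
    Incident(datetime(2024, 1, 3, 10, 0), datetime(2024, 1, 3, 12, 0)),
    Incident(datetime(2024, 1, 9, 8, 0), datetime(2024, 1, 9, 9, 0)),
]
# One deployment per day over a 10-day window.
deploys = [datetime(2024, 1, d) for d in range(1, 11)]

print(mean_time_to_recover(incidents))   # 1:30:00
print(deployments_per_day(deploys, 10))  # 1.0
```

Tracking both numbers together reflects the trade-off described above. A separated organization may keep systems stable but deploy rarely, while the goal is to raise throughput without letting MTTR grow.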

The DevOps methodology

The DevOps methodology aims to optimize the systems development life cycle by integrating software development (Dev) and IT operations (Ops) work.

Many enterprises that still have separate IT operations and software development teams release new software only quarterly, yearly, or even once every two years because of the significant manual effort and coordination such a release process requires. In contrast, enterprises employing DevOps principles can deploy new features several times a day.

The Amazon DevOps story

Let’s use Amazon’s continuous improvement and software automation story as a case study. Amazon realized that it took approximately 14 days to go from idea to production (which is already fast compared to other companies), of which only about 1 to 2 days were spent turning the idea into peer-reviewed code. They invested in improving their Continuous Integration / Continuous Delivery (CI/CD) pipeline and achieved a staggering 90% reduction in time from check-in to production.

Adoption of this automated pipeline within Amazon grew as people saw the success of the teams that started using it. Adoption across diverse teams also meant that individual teams found their own solutions to problems that other teams shared. Amazon learned that building knowledge messages into its deployment tools helped spread these best practices to the tools’ users, which in turn increased their adoption.

Another big lesson from the increased adoption was that release frequency wasn’t the only performance metric that mattered. Stability was also important, and mitigating the risk of introducing bugs into production required sufficient testing at every stage of the pipeline. Their CI/CD pipeline incorporated testing at the following stages:

- Unit testing on the build machine, including style checks, code coverage, etc.

- Integration testing, including automated tests against external interfaces (e.g., browsers), failure injection, security checks, etc.

- Pre-production testing, which tests the artifact in the operational environment. These technical tests verify that the service can connect to all production services, but at this point the artifacts are not yet exposed to inbound production traffic.

- Validation in production, where the new software is first released to only a few customers and, based on validation checks, is then either rolled out to all customers or rolled back.
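The staged testing described above can be pictured as an ordered list of gates. An artifact is promoted only if it passes every stage, and the pipeline stops at the first failure. This is a minimal Python sketch under stated assumptions, not Amazon’s actual pipeline. The stage checks are hypothetical placeholders standing in for real test runners, and one of them deliberately fails. The function `run_pipeline` and the artifact name `build-42` are illustrative.

```python
from typing import Callable

# Each stage is a (name, check) pair; a check returns True if the artifact passes.
Stage = tuple[str, Callable[[str], bool]]


def run_pipeline(artifact: str, stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns whether the artifact passed every stage, plus the names of
    the stages that were actually executed.
    """
    executed: list[str] = []
    for name, check in stages:
        executed.append(name)
        if not check(artifact):
            print(f"{name}: FAILED, {artifact} is not promoted")
            return False, executed
        print(f"{name}: passed")
    return True, executed


# Hypothetical placeholder checks; real ones would run unit tests, linters,
# browser tests, connectivity checks, and so on.
stages: list[Stage] = [
    ("unit tests", lambda a: True),
    ("integration tests", lambda a: True),
    ("pre-production tests", lambda a: False),  # simulate a failure
    ("validation in production", lambda a: True),
]

ok, ran = run_pipeline("build-42", stages)
print(ok, ran)
```

Because the failing pre-production stage halts the run, the artifact never reaches validation in production. That halt is the risk-mitigation property the stages are meant to provide.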

These testing processes mitigate risk, but it is also essential to keep tight control over the actual release process. This is achieved by closely monitoring key metrics and providing control options, such as the ability to halt or roll back a release. The control options provide a risk barrier to moving from Development to Production. The controlled release is where the development cycle transitions to the operational cycle.
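One way to picture such a risk barrier is a canary gate. While the new version serves only a few customers, monitored error rates decide whether to promote it to everyone or roll it back. The Python sketch below assumes hypothetical `CanaryMetrics` data and arbitrary thresholds (`tolerance`, `min_requests`). It illustrates the idea rather than any particular tool’s logic.

```python
from dataclasses import dataclass


@dataclass
class CanaryMetrics:
    """Hypothetical monitoring data gathered while the new version serves a few customers."""
    requests: int
    errors: int


def release_decision(canary: CanaryMetrics, baseline_error_rate: float,
                     tolerance: float = 0.01, min_requests: int = 1000) -> str:
    """Decide whether to wait, promote, or roll back a canary release.

    The default thresholds are illustrative assumptions, not recommended values.
    """
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to judge the release
    error_rate = canary.errors / canary.requests
    if error_rate <= baseline_error_rate + tolerance:
        return "promote"  # roll out to all customers
    return "rollback"  # return the canary customers to the previous version


print(release_decision(CanaryMetrics(requests=500, errors=0), 0.002))     # wait
print(release_decision(CanaryMetrics(requests=5000, errors=20), 0.002))   # promote
print(release_decision(CanaryMetrics(requests=5000, errors=200), 0.002))  # rollback
```

The explicit "wait" outcome reflects a design choice: the gate refuses to decide until monitoring has seen enough traffic to be meaningful.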

The DevOps cycle

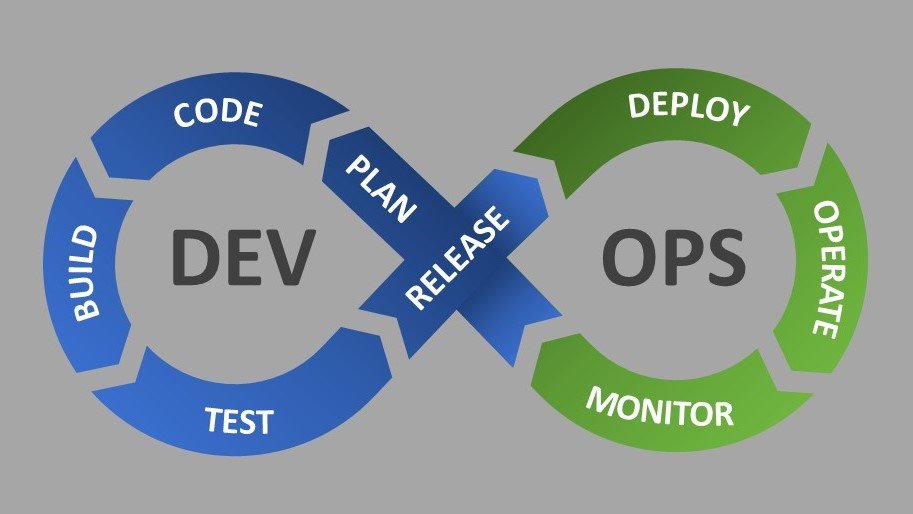

The DevOps “infinity” cycle combines the Development and IT Operations cycles into a single loop and visualizes them. It is a useful reference for thinking about this form of systems development life cycle.

At a high level, the cycle works as follows:

- Based on feedback from operations, the team plans new releases.

- The plans or ideas are converted to code by a development team.

- The new code is built on a build machine, producing an artifact. Unit testing is also done at this stage.

- The artifact is then submitted for automated integration testing.

- After successful testing, the artifact can be scheduled for release into the production environment using the appropriate “risk barrier” controls.

- Once released, the artifact is deployed to the production environment according to the system’s infrastructure configuration.

- The artifact is now operational and can deliver services to its users.

- The operational service is monitored, giving IT Operations the input it needs to provide feedback to the development team. That feedback drives the planning of new features and bug fixes, closing the loop.
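Because the cycle never ends, it can be modeled as a closed loop in which Plan follows Monitor. This minimal Python sketch uses a hypothetical `Stage` enum whose members correspond to the eight steps listed above. It illustrates only the ordering and the wrap-around, not any tool’s workflow.

```python
from enum import Enum


class Stage(Enum):
    """The eight stages of the DevOps cycle, in order."""
    PLAN = 1
    CODE = 2
    BUILD = 3
    TEST = 4
    RELEASE = 5
    DEPLOY = 6
    OPERATE = 7
    MONITOR = 8

    def next(self) -> "Stage":
        """Return the following stage; MONITOR wraps back to PLAN, closing the loop."""
        members = list(Stage)
        return members[(members.index(self) + 1) % len(members)]


# Walk one full lap of the cycle, starting and ending at PLAN.
stage = Stage.PLAN
lap = [stage.name]
for _ in range(len(Stage)):
    stage = stage.next()
    lap.append(stage.name)

print(" -> ".join(lap))
# PLAN -> CODE -> BUILD -> TEST -> RELEASE -> DEPLOY -> OPERATE -> MONITOR -> PLAN
```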

Many tools target a specific step in the process, and some cover the entire cycle. To give you an idea, here is a short list of tools available at the time of writing:

- Plan: Jira, Asana

- Code: Git, IDEs

- Build: Jenkins, Bamboo

- Test: Robot Framework

- Release: Jenkins, Bamboo, Kubernetes

- Deploy: Kubernetes, Puppet, Ansible, Chef

- Operate: Depends on your app, e.g., a web server might use nginx

- Monitor: Nagios, Zabbix, Prometheus, Grafana, Kibana

You might be thinking: “I understand the DevOps process, but our company uses few or none of the tools above, so we can’t go ‘full DevOps.’” That is where the DevOps mindset comes in.

The DevOps mindset

So many companies and people use software that no one-size-fits-all approach is possible. New companies, products, and projects can adopt DevOps practices from the start, but:

- How do you transform legacy infrastructure and processes that support only an annual software release into ones that support monthly, weekly, or even daily releases?

- How do you ensure reproducibility and stability while staying resilient to changes in the team?

It starts with developing a DevOps culture, which will undoubtedly take time. It took Amazon almost a decade to get this right, and they are still refining the process today. One way to start building a DevOps culture is to demonstrate the benefits to the organization, start small, and establish fundamental principles, such as the four key principles employed by GitLab:

- Automation of the software development lifecycle

- Collaboration and communication

- Continuous improvement and minimization of waste

- Hyperfocus on user needs with short feedback loops

The benefit of laying down fundamental principles is that they can guide Development and IT Operations teams in forming a DevOps practice that works within the organization and its context. Architectural decisions for development and operations should account for automation and support a streamlined workflow. In the end, it should be a team effort.

Summary

Software needs hardware to run on, and maintaining both becomes complex at scale. This complexity led organizations to form separate development and IT operations teams.

This dividing line between the operational systems and the development processes solved the scaling problem. However, organizations still struggled to deliver new features and bug fixes quickly and to recover from failures. DevOps addresses these challenges by enabling scalability, faster delivery, and faster recovery.

The DevOps methodology aims to optimize the systems development life cycle by integrating software development (Dev) and IT operations (Ops) work. It focuses on:

- automation of manual tasks,

- mitigating risk through automated testing,

- implementing release controls,

- reproducible (configurable) infrastructure,

- and feedback loops from Ops to Dev based on the monitoring results.

Finally, DevOps offers significant advantages to an organization, but adopting it requires developing a DevOps mindset (culture). This culture is what ultimately enables the organization to find a DevOps approach suited to its development and IT operations.
